Major AI companies have been reluctant to share details for commercial and cybersecurity reasons, according to parties involved in the discussions. No existing regulation compels them to disclose the information.

AI Companies Reluctant to Reveal Secret Sauce

If the companies choose to disclose the information, it would be the first time they have revealed the secret sauce that regulators are desperate to understand. In June, London-based DeepMind and San Francisco-based Anthropic gave the UK government permission to peek under the hood of some of their advanced algorithms, though they did not specify how this would work in practice.

Anthropic said sharing its model weights, essentially the learned values that determine how much importance the model assigns to different inputs, would create cybersecurity risks. Anthropic was founded by siblings who left ChatGPT maker OpenAI over safety concerns.

Instead of exposing its weights, the company suggested giving the government the same level of access it grants customers through Application Programming Interfaces (APIs). DeepMind, a company that has made significant progress in predicting protein structures with AI, has reportedly not finalized how it will give governments access.

Granting access to models is a “tricky issue,” according to one government source.

“There is no button these companies can just press to make it happen. These are open and unresolved research questions.”

The UK government hopes to secure access to AI models before the November summit, when global leaders, civil servants, and academics will converge at Bletchley Park in Buckinghamshire to discuss the novel cybersecurity and biowarfare threats that AI could pose.

Bletchley Park is the English country estate where codebreakers, including computer scientist Alan Turing, were based during World War II. Turing led the team that deciphered the Enigma code, work widely credited with shortening the war with Germany by several years and saving millions of lives.

The summit responds to calls for more regulation following the rapid progress of AI products and the chips that power them. UK-based chip designer Graphcore, for example, has developed chips designed specifically for AI models.

UK Government Pushes for AI Rules While Courting EU

But is the UK government overreaching? What could be the reason for its eagerness to regulate the space?

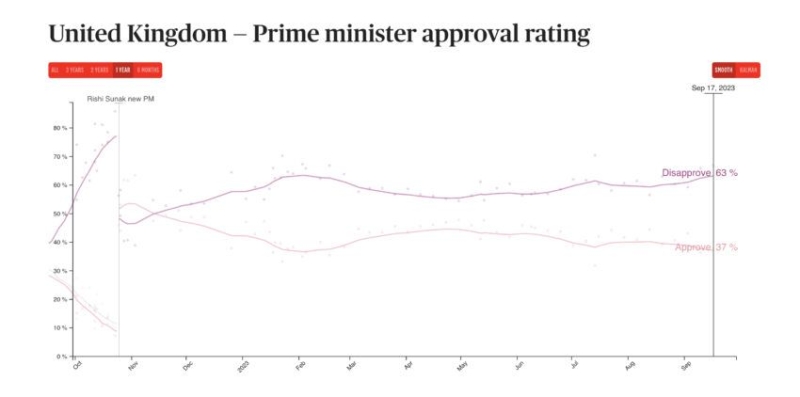

One reason could be the next general election, which Prime Minister Rishi Sunak reportedly wants to hold in November 2024. By hosting the AI summit, Sunak could be looking to improve his approval ratings relative to Labour Party leader Keir Starmer.

UK PM approval rating | Source: Politico

Sunak’s government has been working feverishly to restore relations with key European Union partners following Brexit. In addition to renewing ties with Belgium and France on financial services regulation and immigration, the UK recently rejoined the EU’s $90 billion-plus Horizon Europe and Copernicus science research programs.

Despite the deepening ties, the UK wants to remain economically and scientifically distinct from the bloc. Sunak has invited only six EU leaders to the AI summit, even though the EU leads on AI regulation, with rules that may go into effect before the end of the year.

Sunak’s Chancellor of the Exchequer, Jeremy Hunt, said the UK has invested heavily in research over the past year and pointed to the speed of the country’s COVID-19 vaccine rollout as proof that it is a force to be reckoned with. Earlier this month, Hunt met with leaders of some of the big tech companies attending the summit.